Meta-Embeddings: Higher-Quality Word Embeddings via Ensembles of Embedding Sets

(Source: Google Pictures)

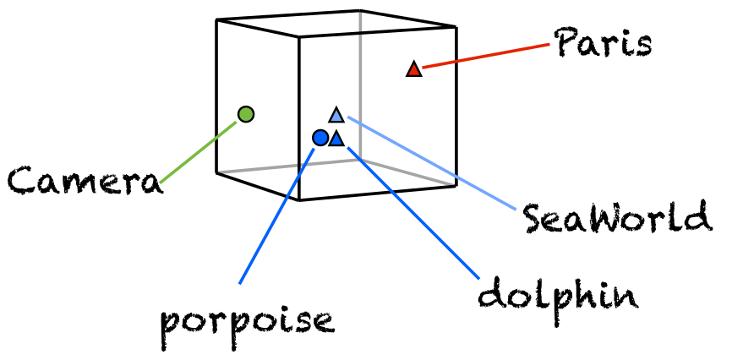

We release the meta-embeddings described in Yin and Schütze (ACL 2016), which are built by ensembling several publicly available pretrained word embedding sets. Our meta-embeddings have higher quality and a larger vocabulary than any individual component set.
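To make the ensembling idea concrete, here is a minimal sketch of a 1TON-style learner. It assumes that each word has one shared meta-embedding, that a per-set linear projection maps it into each component space, and that both are fitted by plain gradient descent on squared error. `train_1ton_sketch`, its parameters, and the toy vectors are hypothetical illustrations, not the released training code or the paper's exact hyperparameters.

```python
import random


def train_1ton_sketch(components, meta_dim, epochs=300, lr=0.05, seed=0):
    """Hypothetical toy learner for 1TON-style meta-embeddings.

    components: list of dicts mapping word -> list of floats. All dicts are
    assumed to cover the same (overlapping) vocabulary.
    Returns (meta, projections, history): meta maps word -> meta-embedding,
    projections[k] is a meta_dim x d_k matrix, history is the loss per epoch.
    """
    rng = random.Random(seed)
    vocab = sorted(components[0])
    # One randomly initialised meta-embedding per word.
    meta = {w: [rng.uniform(-0.5, 0.5) for _ in range(meta_dim)] for w in vocab}
    # One linear projection (meta_dim x d_k) per component embedding set.
    projections = []
    for comp in components:
        d_k = len(comp[vocab[0]])
        projections.append(
            [[rng.uniform(-0.5, 0.5) for _ in range(d_k)] for _ in range(meta_dim)]
        )

    history = []
    for _ in range(epochs):
        total = 0.0
        for w in vocab:
            m = meta[w]
            grad_m = [0.0] * meta_dim
            for comp, W in zip(components, projections):
                d_k = len(W[0])
                # Project the meta-embedding into this component's space: m @ W.
                pred = [sum(m[i] * W[i][j] for i in range(meta_dim)) for j in range(d_k)]
                err = [p - t for p, t in zip(pred, comp[w])]
                total += sum(e * e for e in err)
                # Gradient steps on ||m @ W - target||^2 (constant 2 folded into lr).
                for i in range(meta_dim):
                    grad_m[i] += sum(err[j] * W[i][j] for j in range(d_k))
                    for j in range(d_k):
                        W[i][j] -= lr * m[i] * err[j]
            for i in range(meta_dim):
                m[i] -= lr * grad_m[i]
        history.append(total)
    return meta, projections, history


# Toy data: two made-up component sets with different dimensionalities.
comp_a = {"cat": [0.9, 0.1, -0.2], "dog": [0.8, 0.2, -0.1],
          "car": [-0.5, 0.7, 0.3], "bus": [-0.4, 0.8, 0.4]}
comp_b = {"cat": [0.6, -0.3], "dog": [0.5, -0.2],
          "car": [-0.2, 0.9], "bus": [-0.3, 0.8]}
meta, projections, history = train_1ton_sketch([comp_a, comp_b], meta_dim=2)
print(f"loss: {history[0]:.4f} -> {history[-1]:.4f}")
```

Because each component set gets its own projection, sets with different dimensionalities can share one meta-embedding space. A realistic version would use a linear-algebra library and far larger vocabularies.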

This page provides two versions of meta-embeddings, "1TON" and "1TON_plus". Each version comes in two files, "overlap" and "oov". The "overlap" file contains meta-embeddings for the vocabulary shared by all component pretrained sets. The "oov" file contains meta-embeddings for the remaining words, which appear in the union of the component vocabularies but are not covered by every set.
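The split between the two files, and one way to merge them into a single lookup table, can be sketched as follows. This assumes the files use a word2vec-style text format (one `word v1 v2 ... vd` entry per line, with an optional `count dim` header). The exact release format is not stated here, and `parse_embeddings`, `partition_vocab`, and `load_meta_embeddings` are hypothetical helpers.

```python
def parse_embeddings(lines):
    """Parse lines of the assumed form 'word v1 v2 ... vd' into a dict.

    A leading word2vec-style header line '<vocab_size> <dim>' is skipped.
    """
    vectors = {}
    for line in lines:
        parts = line.split()
        if len(parts) < 2:
            continue  # blank or malformed line
        if len(parts) == 2 and all(p.isdigit() for p in parts):
            continue  # header line: '<vocab_size> <dim>'
        vectors[parts[0]] = [float(x) for x in parts[1:]]
    return vectors


def partition_vocab(vocabs):
    """Split component vocabularies into 'overlap' (words in every set)
    and 'oov' (words in the union but missing from at least one set)."""
    overlap = set.intersection(*map(set, vocabs))
    union = set.union(*map(set, vocabs))
    return overlap, union - overlap


def load_meta_embeddings(overlap_lines, oov_lines):
    """Merge the 'overlap' and 'oov' files into one lookup table."""
    table = parse_embeddings(oov_lines)
    # The two files should be disjoint; if not, overlap entries win.
    table.update(parse_embeddings(overlap_lines))
    return table


overlap, oov = partition_vocab([{"cat", "dog", "car"}, {"cat", "dog"}, {"cat", "dog", "bus"}])
# overlap == {"cat", "dog"}, oov == {"car", "bus"}

table = load_meta_embeddings(
    ["2 3", "cat 0.1 0.2 0.3", "dog 0.4 0.5 0.6"],  # stand-in for an "overlap" file
    ["bus 0.7 0.8 0.9"],                            # stand-in for an "oov" file
)
```

With real files, an open file handle (e.g. `open(path, encoding="utf-8")`) can be passed in place of the in-memory lists, since the parser only iterates over lines.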

The results in Table 3 of the paper use the "overlap" meta-embeddings.

Reference:

Wenpeng Yin and Hinrich Schütze. Learning Word Meta-Embeddings. ACL 2016.


Contact: Wenpeng Yin (mr.yinwenpeng@gmail.com)